Abstract

COVID-19 accelerated a decade-long shift to remote work by normalizing working from home on a large scale. Indeed, 75% of US employees in a 2021 survey reported a personal preference for working remotely at least one day per week1, and studies estimate that 20% of US workdays will take place at home after the pandemic ends2. Here we examine how this shift away from in-person interaction affects innovation, which relies on collaborative idea generation as the foundation of commercial and scientific progress3. In a laboratory study and a field experiment across five countries (in Europe, the Middle East and South Asia), we show that videoconferencing inhibits the production of creative ideas. By contrast, when it comes to selecting which idea to pursue, we find no evidence that videoconferencing groups are less effective (and preliminary evidence that they may be more effective) than in-person groups. Departing from previous theories that focus on how oral and written technologies limit the synchronicity and extent of information exchanged4,5,6, we find that our effects are driven by differences in the physical nature of videoconferencing and in-person interactions. Specifically, using eye-gaze and recall measures, as well as latent semantic analysis, we demonstrate that videoconferencing hampers idea generation because it focuses communicators on a screen, which prompts a narrower cognitive focus. Our results suggest that virtual interaction comes with a cognitive cost for creative idea generation.

Main

In the wake of the COVID-19 pandemic, millions of employees were mandated to work from home indefinitely and virtually collaborate using videoconferencing technologies. This unprecedented shift to full-time remote employment demonstrated the viability of virtual work at a large scale, further legitimizing the growing work-from-home movement of the last decade. In a 2021 survey, 75% of US employees reported a personal preference for working from home at least one day a week, and 40% of employees indicated they would quit a job that required full-time in-person work1. In response, leading firms across various sectors, including Google, Microsoft, JPMorgan and Amazon, increased the flexibility of their post-pandemic work-from-home policies7, and research estimates that 20% of all US workdays will be conducted remotely once the pandemic ends2.

We explore how this shift towards remote work affects essential workplace tasks. In particular, collaborative idea generation is at the heart of scientific and commercial progress3,8. From the Greek symposium to Lennon and McCartney, collaborations have provided some of the most important ideas in human history. Until recently, these collaborations have largely required the same physical space because the existing communication technologies (such as letters, email and phone calls) limited the extent of information that is available to communicators and reduced the synchronicity of information exchange (media richness theory, social presence theory, media synchronicity theory4,5,6). However, recent advances in network quality and display resolution have ushered in a synchronous, audio-visual technology—videoconferencing—that conveys many of the same aural and non-verbal information cues as face-to-face interaction. If videoconferencing eventually closes the information gap between virtual and in-person interaction, the question arises whether this new technology could effectively replace in-person collaborative idea generation.

Here we show that, even if video interaction could communicate the same information, there remains an inherent and overlooked physical difference in communicating through video that is not psychologically benign: in-person teams operate in a fully shared physical space, whereas virtual teams inhabit a virtual space that is bounded by the screen in front of each member. Our data suggest that this physical difference in shared space compels virtual communicators to narrow their visual field by concentrating on the screen and filtering out peripheral visual stimuli that are not visible or relevant to their partner. According to previous research that empirically and neurologically links visual and cognitive attention9,10,11,12,13, as virtual communicators narrow their visual scope to the shared environment of a screen, their cognitive focus narrows in turn. This narrowed focus constrains the associative process underlying idea generation, whereby thoughts ‘branch out’ and activate disparate information that is then combined to form new ideas14,15,16,17. Yet the narrowed cognitive focus induced by the use of screens in virtual interaction does not hinder all collaborative activities. Specifically, idea generation is typically followed by selecting which idea to pursue, which requires cognitive focus and analytical reasoning18. Here we show that virtual interaction uniquely hinders idea generation—we find that videoconferencing groups generate fewer creative ideas than in-person groups due to narrowed visual focus, but we find no evidence that videoconferencing groups are less effective when it comes to idea selection.

Laboratory experiment

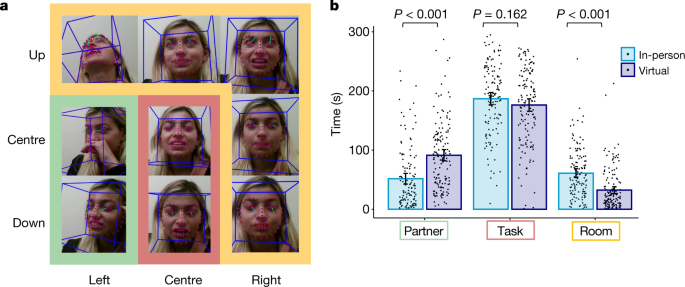

We first recruited 602 people to participate in an incentive-aligned laboratory study across two batches of data collection (see the ‘Laboratory experiment’ section of the Methods). The participants were randomly paired, and we instructed each pair to generate creative uses for a product for five minutes and then spend one minute selecting their most creative idea. Pairs were randomly assigned to work together on these tasks either in person or virtually (with their partner displayed by video across from them and the self-view removed; Fig. 1). We assessed ideation performance by counting both the total number of ideas and the subset of creative ideas generated by each pair14,16,19,20,21,22,23. We assessed idea selection quality using two different measures: (1) the ‘creativity score’ of the pair’s selected idea23 and (2) the ‘decision error score’—the difference in creativity score between the top scoring idea and the selected idea—where smaller values reflect a better decision23,24 (see the ‘Dependent measures’ section of the Methods).
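To make the second selection measure concrete, the following minimal sketch computes a decision error score as described above (top score in the pair's idea pool minus the score of the selected idea). The scores and the function name `decision_error` are hypothetical illustrations, not the study's data or code.

```python
def decision_error(idea_scores, selected_index):
    """Top creativity score in the pool minus the score of the selected idea.

    Smaller values reflect a better decision; 0 means the pair picked its best idea.
    """
    return max(idea_scores) - idea_scores[selected_index]


# Hypothetical judge-rated creativity scores for one pair's generated ideas.
scores = [3.5, 4.75, 2.0, 4.25]
selected = 3  # index of the idea the pair selected (scored 4.25)

selected_score = scores[selected]          # measure (1): creativity score of the selected idea
error = decision_error(scores, selected)   # measure (2): decision error score
print(selected_score, error)
```

In this toy case the pair passed over its top-scoring idea (4.75) for one scored 4.25, giving a decision error of 0.5.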

Fig. 1: In the laboratory experiment, we randomly assigned half of the pairs to work together in person and the other half to work together in separate, identical rooms using videoconferencing. The pairs in the virtual condition interacted with a real-time video of their partner’s face displayed on a 15-inch retina-display screen with no self-view. The image was taken during the first batch of data collection in the laboratory. Consent was obtained to use these images for publication.

Virtual pairs generated significantly fewer total ideas (mean (M) = 14.74, s.d. = 6.23) and creative ideas (M = 6.73, s.d. = 3.27) than in-person pairs (total ideas: M = 16.77, s.d. = 7.27, negative binomial regression, n = 301 pairs, b = 0.13, s.e. = 0.05, z = 2.72, P = 0.007, Cohen’s d = 0.30, 95% confidence interval (CI) = 0.07–0.53; creative ideas: M = 7.92, s.d. = 3.40, negative binomial regression, n = 301 pairs, b = 0.16, s.e. = 0.05, z = 3.14, P = 0.002, Cohen’s d = 0.36, 95% CI = 0.13–0.58; see Extended Data Table 1 for a summary of all of the analyses, Extended Data Table 2 for results from alternative models and Supplementary Information A for model assumption tests). By contrast, we found indications that virtual interaction might increase decision quality. Virtual pairs selected a significantly higher scoring idea (M = 4.28, s.d. = 0.81) and had a significantly lower decision error score (M = 0.78, s.d. = 0.67) compared with in-person pairs (selected idea: M = 4.08, s.d. = 0.84, linear regression, n = 292 pairs, b = 0.20, s.e. = 0.10, t290 = 2.04, P = 0.043, Cohen’s d = 0.24, 95% CI = 0.01–0.47; error score: M = 1.01, s.d. = 0.77, linear regression, n = 292 pairs, b = 0.23, s.e. = 0.08, t290 = 2.69, P = 0.007, Cohen’s d = 0.32, 95% CI = 0.08–0.55; see Supplementary Information B for model assumption tests). However, the effect of modality on decision quality attenuated when controlling for the number of ideas that each pair generated (selected idea: linear regression, n = 292 pairs, b = 0.18, s.e. = 0.10, t289 = 1.88, P = 0.062; error score: linear regression, n = 292 pairs, b = 0.20, s.e. = 0.08, t289 = 2.40, P = 0.017).
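As a rough check on how the reported effect sizes relate to the descriptive statistics, the sketch below recomputes Cohen's d from the means and standard deviations above, using the per-condition pair counts given in the Methods (75 + 74 in-person pairs and 75 + 77 virtual pairs). It also uses the fact that, in a count regression with a log link and a single binary predictor, the coefficient is approximately the log ratio of the two group means. This is an illustration under those assumptions, not the authors' analysis code.

```python
import math


def cohens_d(m1, sd1, n1, m2, sd2, n2):
    """Standardized mean difference using the pooled standard deviation."""
    pooled_var = ((n1 - 1) * sd1 ** 2 + (n2 - 1) * sd2 ** 2) / (n1 + n2 - 2)
    return (m1 - m2) / math.sqrt(pooled_var)


# Pair counts per condition, summed over the two batches described in the Methods.
n_in_person, n_virtual = 75 + 74, 75 + 77

# Total ideas: in-person versus virtual pairs.
d_total = cohens_d(16.77, 7.27, n_in_person, 14.74, 6.23, n_virtual)
b_total = math.log(16.77 / 14.74)  # log ratio of group means

# Creative ideas: in-person versus virtual pairs.
d_creative = cohens_d(7.92, 3.40, n_in_person, 6.73, 3.27, n_virtual)
b_creative = math.log(7.92 / 6.73)

print(round(d_total, 2), round(b_total, 2))
print(round(d_creative, 2), round(b_creative, 2))
```

Under these assumptions the recomputed values round to the reported d = 0.30 and b = 0.13 for total ideas, and d = 0.36 and b = 0.16 for creative ideas.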

We next examined our hypothesis that virtual communication hampers idea generation because the bounded virtual space shared by pairs narrows visual scope, which in turn narrows cognitive scope. Specifically, in the second batch of data collection, 151 randomly assigned pairs generated creative uses for a product, either in person or virtually, in a laboratory room containing ten props (five expected, such as folders; and five unexpected, such as a skeleton poster; see the ‘Stimulus 2: bubble wrap’ section of the Methods; Extended Data Fig. 1). We captured visual focus in two ways. First, at the end of the study, the participants were asked to individually recall the props in the room and indicate them on a worksheet. Second, we recorded and extracted participants’ eye gaze throughout the task (Fig. 2; see the ‘Stimulus 2 process measures’ section in the Methods).

Fig. 2: Pairs interacting virtually spent more time looking at their partner (Mvirtual = 91.4 s, s.d. = 58.3, Min-person = 51.7 s, s.d. = 52.2, linear mixed-effect regression, n = 270 participants (126 for in-person pairs and 144 for virtual pairs), b = 39.70, s.e. = 6.83, t139 = 5.81, P < 0.001, Cohen’s d = 0.71, 95% CI = 0.47–0.96) and less time looking at the surrounding room (Mvirtual = 32.4 s, s.d. = 34.8, Min-person = 61.0 s, s.d. = 43.1, linear mixed-effect regression, n = 270 participants, b = 28.75, s.e. = 5.10, t143 = 5.64, P < 0.001, Cohen’s d = 0.74, 95% CI = 0.49–0.99). Importantly, the time spent looking around the room predicted creative idea generation (negative binomial regression, n = 146 pairs (69 in-person pairs and 77 virtual pairs), b = 0.003, s.e. = 0.001, z = 3.14, P = 0.002) and mediated the effect of modality (in-person versus virtual) on idea generation (10,000 nonparametric bootstraps, 95% CI = −1.14 to −0.08). a, Example of how eye gaze during the task was categorized. b, Differences in the amount of time (seconds) by modality for looking at one’s partner (partner gaze) and looking around the room (room gaze). Data are from the second batch of data collection in the laboratory and are presented as mean ± 95% CIs. All statistical tests were two-sided, and no adjustments were made for multiple comparisons. Consent was obtained to use these images for publication.

Supporting our proposition that virtual pairs narrow their visual focus to their shared environment (that is, the screen), virtual pairs spent significantly more time looking directly at their partner (Mvirtual = 91.4 s, s.d. = 58.3, Min-person = 51.7 s, s.d. = 52.2, linear mixed-effect regression, n = 270 participants, b = 39.70, s.e. = 6.83, t139 = 5.81, P < 0.001, Cohen’s d = 0.71, 95% CI = 0.47–0.96), spent significantly less time looking at the surrounding room (Mvirtual = 32.4 s, s.d. = 34.8, Min-person = 61.0 s, s.d. = 43.1, linear mixed-effect regression, n = 270 participants, b = 28.75, s.e. = 5.10, t143 = 5.64, P < 0.001, Cohen’s d = 0.74, 95% CI = 0.49–0.99; Fig. 2) and remembered significantly fewer unexpected props in the surrounding room (Mvirtual = 1.53, s.d. = 1.38, Min-person = 1.95, s.d. = 1.38, Poisson mixed-effect regression, n = 302 participants, b = 0.25, s.e. = 0.10, z = 2.47, P = 0.014, Cohen’s d = 0.30, 95% CI = 0.08–0.53) than in-person pairs. There was no evidence that the time spent looking at the task differed by modality (Mvirtual = 176.1 s, s.d. = 64.0, Min-person = 186.8 s, s.d. = 60.2, linear mixed-effect regression, n = 270 participants, b = 10.65, s.e. = 7.60, t268 = 1.40, P = 0.162, Cohen’s d = 0.17, 95% CI = −0.07–0.41; see Supplementary Information C for model assumption tests).

Importantly, unexpected prop recall and gaze around the room were both significantly associated with an increased number of creative ideas (room recall: negative binomial regression, n = 151 pairs, b = 0.09, s.e. = 0.03, z = 2.82, P = 0.005; room gaze: negative binomial regression, n = 146 pairs, b = 0.003, s.e. = 0.001, z = 3.14, P = 0.002), and both of these measures independently mediated the effect of modality on idea generation (10,000 nonparametric bootstraps, recall: 95% CI = −0.61 to −0.01; gaze: 95% CI = −1.14 to −0.08; Extended Data Figs. 2 and 3). This combination of analyses converges on the view that virtual communication narrows visual focus, which subsequently hampers idea generation.
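The logic of a bootstrapped mediation test can be sketched as follows. This is a simplified illustration only: it uses ordinary least-squares paths on synthetic data, whereas the analyses reported here used negative binomial models and 10,000 resamples. All variable names, the simulated effect sizes and the helper functions are assumptions introduced for the example.

```python
import random


def slope(x, y):
    """OLS slope from a simple regression of y on x."""
    n = len(x)
    mean_x, mean_y = sum(x) / n, sum(y) / n
    sxy = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    sxx = sum((a - mean_x) ** 2 for a in x)
    return sxy / sxx


def residuals(x, y):
    """Residuals of y after regressing it on x (with intercept)."""
    b = slope(x, y)
    n = len(x)
    mean_x, mean_y = sum(x) / n, sum(y) / n
    return [yi - mean_y - b * (xi - mean_x) for xi, yi in zip(x, y)]


def indirect_effect(x, m, y):
    """Product of path a (x -> m) and path b (m -> y, controlling for x)."""
    a = slope(x, m)
    rm, ry = residuals(x, m), residuals(x, y)
    b = sum(p * q for p, q in zip(rm, ry)) / sum(p * p for p in rm)
    return a * b


def bootstrap_ci(x, m, y, n_boot=2000, seed=0):
    """Percentile 95% CI for the indirect effect, resampling pairs with replacement."""
    rng = random.Random(seed)
    n = len(x)
    estimates = []
    for _ in range(n_boot):
        idx = [rng.randrange(n) for _ in range(n)]
        estimates.append(
            indirect_effect([x[i] for i in idx], [m[i] for i in idx], [y[i] for i in idx])
        )
    estimates.sort()
    return estimates[int(0.025 * n_boot)], estimates[int(0.975 * n_boot) - 1]


# Synthetic data (not the study's): x = 1 for virtual pairs, m = room gaze (s), y = creative ideas.
rng = random.Random(1)
x = [i % 2 for i in range(150)]
m = [60 - 30 * xi + rng.gauss(0, 20) for xi in x]
y = [5 + 0.03 * mi + rng.gauss(0, 1) for mi in m]

ci_low, ci_high = bootstrap_ci(x, m, y)
print(ci_low, ci_high)
```

A confidence interval that lies entirely below zero, as in the intervals reported above, indicates that the virtual condition lowers the outcome through the mediator.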

These findings provide causal evidence indicating that virtual (versus in-person) interaction hampers idea generation. However, this highly controlled laboratory paradigm may not fully capture the creative process as it unfolds in typical workplaces. Thus, to test the generalizability of the results, we replicated the study in an actual work context in five country sites of a large multinational telecommunications infrastructure company. We selected this field setting because it involved domain experts who are highly invested in the outcome, typically know their partners and regularly use virtual-communication technology in their work.

Field experiment

The company recruited 1,490 engineers to participate in an ideation workshop and randomly assigned the engineers into pairs collaborating either face-to-face or over videoconference (see the ‘Field experiment’ section of the Methods). The pairs generated product ideas for an hour and then selected and developed one idea to submit as a future product innovation for the company. The engineers who worked on the task virtually (M = 7.43, s.d. = 5.17) generated fewer total ideas than in-person pairs (M = 8.58, s.d. = 6.03, negative binomial mixed-effect regression, n = 745 pairs, b = 0.14, s.e. = 0.05, z = 3.13, P = 0.002, Cohen’s d = 0.21, 95% CI = 0.06–0.35). This pattern was replicated at all five sites (Table 1). In three of the workshops (n = 1,238 out of 1,490), engineers served as peer-evaluators and provided external ratings. In these sessions, we found that virtual engineer pairs generated both fewer total ideas (M = 7.42, s.d. = 5.19) and fewer creative ideas (M = 3.83, s.d. = 2.83) compared with in-person teams (total ideas: M = 8.66, s.d. = 6.14, negative binomial mixed-effect regression, n = 619 pairs, b = 0.15, s.e. = 0.05, z = 3.07, P = 0.002, Cohen’s d = 0.22, 95% CI = 0.06–0.38; creative ideas: M = 4.32, s.d. = 3.18, negative binomial mixed-effect regression, n = 619 pairs, b = 0.12, s.e. = 0.05, z = 2.15, P = 0.032, Cohen’s d = 0.16, 95% CI = 0.01–0.32).

By contrast, we found preliminary evidence that decision quality was positively impacted by virtual interaction. In-person teams had a significantly higher top-scoring idea in their generated idea pool (Mvirtual = 3.86, s.d. = 0.56, Min-person = 4.01, s.d. = 0.54, linear mixed-effect regression, n = 619 pairs, b = 0.14, s.e. = 0.04, t608 = 3.40, P < 0.001, Cohen’s d = 0.27, 95% CI = 0.11–0.43), but the selected idea did not significantly differ in quality by condition (Mvirtual = 3.05, s.d. = 0.71, Min-person = 3.04, s.d. = 0.78, linear mixed-effect regression, n = 591 pairs, b = 0.004, s.e. = 0.06, t582 = 0.07, P = 0.945, Cohen’s d = 0.01, 95% CI = −0.16–0.17). Furthermore, virtual pairs and in-person pairs differed significantly in their decision error score (Mvirtual = 0.81, s.d. = 0.76, Min-person = 0.99, s.d. = 0.86, Kruskal–Wallis rank sum, n = 591 pairs, χ²(1) = 5.30, P = 0.021, Cohen’s d = 0.22, 95% CI = 0.05–0.38), but this effect was attenuated when controlling for number of ideas (linear mixed-effect regression, n = 591 pairs, b = 0.11, s.e. = 0.06, t580 = 1.81, P = 0.071).

Alternative explanations

We examined several alternative explanations for the negative effect of virtual interaction on idea generation. A summary of our findings is shown in Extended Data Table 3.

Incremental ideas

We found that in-person communicators generate a greater number of total ideas and creative ideas compared with virtual pairs. Here, we examine the possibility that the additional ideas that in-person pairs generated could simply be incremental ideas that are topically similar to each other. Specifically, to test the link between virtual communication and associative thinking and whether in-person groups are truly engaging in divergent thinking rather than simply generating ideas ‘in the same vein’, we used latent semantic analysis to calculate how much each new idea semantically departed from the ‘thought stream’ of previous ideas25 in each pair. When ideation is divergent, ideas should depart from preceding ideas. In contrast to the alternative explanation that in-person teams simply generate many similar ideas, we found that, if anything, in-person pairs generated progressively more disconnected ideas over time relative to virtual pairs (Extended Data Fig. 4 and Supplementary Information D). These results are consistent with our proposed process that virtual communication constrains thinking relative to in-person pairs.
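One way to picture the semantic-departure measure is sketched below: each idea is represented as a vector in a semantic space, and its departure is one minus its cosine similarity to the average of the preceding ideas. The exact operationalization is given in Supplementary Information D and ref. 25; treating the ‘thought stream’ as the centroid of all previous ideas, and the toy three-dimensional vectors, are assumptions made for illustration.

```python
import math


def cosine(u, v):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    return dot / (norm_u * norm_v)


def departure_from_stream(idea_vectors):
    """For each idea after the first, 1 - cosine similarity to the centroid of prior ideas."""
    departures = []
    for i in range(1, len(idea_vectors)):
        previous = idea_vectors[:i]
        centroid = [sum(column) / len(previous) for column in zip(*previous)]
        departures.append(1 - cosine(idea_vectors[i], centroid))
    return departures


# Toy semantic vectors for three successive ideas (hypothetical, not LSA output).
ideas = [
    [1.0, 0.0, 0.0],  # first idea
    [0.8, 0.6, 0.0],  # related to the first idea
    [0.0, 0.0, 1.0],  # unrelated to everything so far
]
departures = departure_from_stream(ideas)
print(departures)
```

Here the second idea stays close to the first (departure of about 0.2), whereas the third is orthogonal to the stream (departure of 1), the pattern that divergent ideation would be expected to produce more often.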

Trust and connection

Previous research found that feelings of connection and trust can facilitate team creativity26,27. To examine whether virtual groups experience reduced feelings of connection and whether reduced feelings of connection underlie the negative effect of virtual communication on idea generation, we used three complementary approaches.

First, we examined whether modality affects subjective feelings of connection using data collected through surveys at the end of the laboratory study. Consistent with previous research28, we found that participants did not report significant differences in feelings of similarity or liking, or in perceptions of how ‘in sync’ they were as a team by modality. Supporting these self-reported feelings, in an economic trust game, virtual and in-person pairs did not significantly differ in the amount of money they entrusted to their partner, although the amount of money entrusted to their partner was positively correlated with the number of creative ideas that the pair generated (details of the methods and analyses in this paragraph are provided in Supplementary Information E).

Second, we examined whether modality affects social behaviours (both verbal and nonverbal) by extracting behavioural data from unobtrusive recordings of laboratory participants during the idea-generation task. Specifically, we quantified social behaviour using two methods: (1) judges blinded to the condition and hypotheses watched video clips of the participants and scored the extent to which they observed 32 social behaviours; and (2) we transcribed each pair’s conversation and ran the transcripts through a linguistic analysis database29. Across these analyses, we found that virtual and in-person pairs significantly differed in only 4 out of 32 observer-rated behaviours and the word usage of only 3 out of 80 social and cognitive linguistic categories in the database. Furthermore, we found that controlling for these differences did not significantly attenuate the negative effect of virtual interaction on idea generation (see Extended Data Table 4 for a summary and Supplementary Information F and G for details of the methods and analyses in this paragraph).

Finally, we examined whether modality affects mimicry, a subconscious indicator of connection30. Specifically, we assessed the extent to which pairs exhibited linguistic mimicry31 using each pair’s transcripts and the extent to which pairs exhibited facial mimicry30,32 using facial expressions extracted from the videos of their interactions33. We found that in-person and virtual pairs did not significantly differ in the extent to which they exhibited either form of mimicry (see Supplementary Information H for details of the methods and analyses in this paragraph).

These results demonstrate how similar video interactions can be to in-person communication. Across three complementary approaches (subjective feelings of closeness, verbal and non-verbal behaviours, and mimicry), we found little evidence that communication modality affects social connection. Furthermore, the significant negative effect of virtual interaction on idea generation holds when controlling for these measures. Thus, it seems improbable that differences in social connection or social behaviour by interaction modality are a main contributor to the results that we report.

Conversation coordination

Although video and in-person interaction contain many of the same informational cues, one important distinction between these modalities is the ability to engage in eye contact. When two individuals look at each other’s eyes on the screen, it appears to neither partner that the other is looking into their eyes, which could affect communication coordination34. Indeed, previous research found that virtual pairs can experience difficulty in determining who should speak next and when35 and, in our studies, virtual pairs reported struggling more with communication coordination. We used three complementary metrics from the laboratory study transcripts to examine whether communication coordination friction contributes to our effect: the number of words spoken, the number of times the transcriber observed ‘crosstalk’ during the interaction (which reflects when two people speak over each other) and the number of speaker switches (back-and-forth) that each pair exhibited. The number of words spoken did not significantly differ by modality, but virtual groups engaged in significantly fewer speaker switches compared with in-person groups and significantly less crosstalk. However, controlling for these measures did not significantly attenuate the effect of modality on number of creative ideas generated (see Supplementary Information I for details of the methods and analyses in this paragraph). Together, although communication coordination is altered by the modality of interaction, it does not appear to fully explain the effect of virtual interaction on idea generation.
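The three transcript metrics can be illustrated with a short sketch. The transcript format (a list of speaker and utterance tuples) and the `[crosstalk]` annotation token are hypothetical conventions chosen for the example; the actual coding scheme is described in Supplementary Information I.

```python
def coordination_metrics(turns, crosstalk_marker="[crosstalk]"):
    """Count words spoken, transcriber-marked crosstalk and speaker switches."""
    words = 0
    crosstalk = 0
    switches = 0
    previous_speaker = None
    for speaker, text in turns:
        tokens = text.split()
        crosstalk += sum(1 for token in tokens if token == crosstalk_marker)
        words += sum(1 for token in tokens if token != crosstalk_marker)
        # A switch occurs whenever the speaker differs from the previous turn's speaker.
        if previous_speaker is not None and speaker != previous_speaker:
            switches += 1
        previous_speaker = speaker
    return {"words": words, "crosstalk": crosstalk, "speaker_switches": switches}


# Hypothetical transcript excerpt for one pair.
turns = [
    ("A", "What about a plate"),
    ("B", "Or a hat [crosstalk]"),
    ("B", "a sun hat"),
    ("A", "Yes a hat"),
]
metrics = coordination_metrics(turns)
print(metrics)
```

Consecutive turns by the same speaker do not count as a switch, so this excerpt yields two switches, one crosstalk event and 13 words.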

Interpersonal processes

In addition to social connection and conversation coordination, previous research has identified a range of interpersonal processes that can affect group idea generation: fear of evaluation (and resulting self-censorship), dominance, social facilitation, social loafing, social sensitivity, perceptions of performance and production blocking. We examined whether interaction modality alters any of these processes during idea generation and whether controlling for these processes meaningfully attenuated our documented negative effect of virtual collaboration on ideation (see Supplementary Information J for the relevant literature and details of the methods and analyses). In these supplementary analyses, we found that our observed effect was robust to each of these alternative explanations.

Policy implications

Our results suggest that there is a unique cognitive advantage to in-person collaboration, which could inform the design of remote work policies. However, when determining whether or not to use virtual teams, many additional factors necessarily enter the calculus, such as the cost of commute and real estate, the potential to expand the talent pool, the value of serendipitous encounters36, and the difficulties in managing time zone and regional cultural differences37. Although some of these factors are intangible and more difficult to quantify, there are concrete and immediate economic advantages to virtual interaction (such as a reduced need for physical space, reduced salary for employees in areas with a lower cost of living and reduced business travel expenses). To capture the best of both worlds, many workplaces already combine, or are planning to combine, in-person and virtual interaction. Indeed, a 2021 survey suggests that American employees will work from home around 20% of the time after the pandemic2. Our results indicate that, in these hybrid setups, it might make sense to prioritize creative idea generation during in-person meetings. However, it is important to caution that our results document only the cognitive cost of virtual interaction. When it comes to deciding the extent to which a firm should use virtual teams, a more comprehensive analysis factoring in other industry and context-specific costs that the firm might face is needed. We leave this important issue to future research.

Extensions and generalizability

Our studies examined the effect of virtual versus in-person interaction in the context of randomly assigned pairs (a context relevant to practice; Supplementary Information K). Here, we explore whether the negative effect of virtual interaction on idea generation generalizes to more established and/or larger teams. To investigate this, we first examined the relevance to established teams by testing whether familiarity moderated the effect of modality on ideation in our field data. We found no evidence that the negative effect of virtual interaction changed depending on level of familiarity between members of the pair (Supplementary Information L). This hints that the effect of virtual interaction on idea generation might extend to established teams. Second, we examined the implications of our effect on larger teams by manipulating the virtual group size in an online experiment. Replicating previous findings on the counterproductivity of larger in-person ideation teams21, virtual pairs outperformed larger virtual groups (see Extended Data Fig. 5 and Extended Data Table 5 for summaries of the set-up and our findings, and Supplementary Information M for details of the methods and analysis). Putting our findings together with previous findings, research suggests that in-person pairs outperform larger in-person groups and virtual pairs, and virtual pairs outperform larger virtual groups. Thus, our substantive recommendation is, cost permitting, to generate ideas in pairs and in person.

In our laboratory study, we used 15-inch MacBook Pro retina display laptops because, at the time of data collection (2016 to 2021), laptops were the most common hardware used in videoconferencing, and 15.6 inch was the most prevalent screen size offered in the market (Supplementary Information N). We examined whether our effect would generalize to larger screen sizes in the online study described above. Specifically, we leveraged participants’ natural variance in screen size to investigate the role of screen size in virtual idea generation. We found no evidence that screen size is associated with idea generation performance in virtual pairs (even when controlling for income, comfort with videoconferencing and time spent on the computer; Supplementary Information M). This suggests that, at least in the current range available on the market, larger screen sizes would not ameliorate the negative effect of virtual interaction on ideation.

Finally, our empirical context involved both novices (college undergraduates) and experts (engineering teams) and examined two types of creativity tasks: a low-complexity task in the laboratory (generating alternative uses for a product) and a high-complexity task in the field (identifying problems that customers might have as well as generating solutions the firm could offer). Although it is reassuring that we demonstrate our effects with two different participant pools that vary in their creativity training, task type and domain expertise, our contexts are representative of only a subset of innovation teams. Future research is needed to examine the moderating factor of group heterogeneity (Supplementary Information O and P) and extensions to other creative industries (Supplementary Information Q).

Methods

Laboratory experiment

Following the methodological recommendation38 of “generaliz[ing] across stimuli by replicating the study across different stimuli within a single experiment”, we collected our laboratory data with stimulus replicates in two batches. Where possible, we combined the data from the two batches to increase statistical power. When the two batches of data were combined, our power to detect a difference in conditions at our effect size was 89%. Below, we outline the methods for each stimulus batch.

Stimulus 1: frisbee

Procedure

Three hundred participants (202 female, 95 male, Mage = 26.1; s.d.age = 8.61; three participants did not complete the survey and are therefore missing demographic information) from a university student and staff pool in the United States participated in the study in exchange for US$10. We posted timeslots in an online research portal that allowed each participant to enroll anonymously into a pair. The participants provided consent before beginning the study. This study was approved by the Stanford University Human Subjects Ethics Board (protocol 35916). The laboratory study was conducted by university research assistants blinded to the hypothesis who were not present during the group interaction.

On arrival, the pairs were informed that their first task was to generate creative alternative uses for a Frisbee and that their second task was to select their most creative idea. These tasks were incentive-aligned: each creative idea that was generated (as scored by outside judges) earned the pair one raffle ticket for a US$200 raffle, and selecting a creative idea earned the pair five additional raffle tickets. Half of the teams (n = 75) learned that they would be working together on the task in the same room, whereas the other half (n = 75) were told that they would be working in separate rooms and communicating using video technology (WebEx, v.36.6–36.9). Groups were assigned in an alternating order, such that the first group was in-person, the second group was virtual and so on. This ensured an equal and unbiased recruitment of each condition.
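The assignment rule and incentive scheme described above can be summarized in a few lines. The function names are hypothetical, and the sketch assumes that the five bonus tickets are awarded whenever the selected idea is judged creative.

```python
def assign_conditions(n_pairs):
    """Alternate conditions in arrival order: first pair in-person, second virtual, and so on."""
    return ["in-person" if i % 2 == 0 else "virtual" for i in range(n_pairs)]


def raffle_tickets(n_creative_ideas, selected_is_creative):
    """One ticket per creative idea, plus five tickets if the selected idea is creative."""
    return n_creative_ideas + (5 if selected_is_creative else 0)


conditions = assign_conditions(150)
print(conditions[:4], conditions.count("in-person"), conditions.count("virtual"))
print(raffle_tickets(7, True))
```

With 150 pairs, alternating assignment yields 75 pairs per condition, matching the sample sizes reported for this batch.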

Before being moved to the task room(s), one participant was randomly selected to be the typist (that is, to record the ideas during the idea-generation stage and indicate the selected idea in the idea-selection stage for the pair) by drawing a piece of paper from a mug. In both communication modalities, each team member had an iPad with a blank Google sheet open (accessed in 2016). The typist had a wireless keyboard and editing capabilities, whereas the other team member could only view the ideas on their iPad. Thus, only the typist could record the generated ideas and select the pair’s top idea, but both members had equal information about the team’s performance (that is, the generated ideas and the selected idea). In-person pairs sat at a table across from each other. Virtual pairs sat at identical tables in separate rooms with their partner displayed on video across from them. The video display was a full-screen video stream of only their partner (the video of the self was not displayed) on a 15-inch retina-display MacBook Pro.

Each pair generated ideas for 5 min and spent 1 min selecting their most creative idea. They indicated their top creative idea by putting an asterisk next to the idea on the Google sheet. Nine groups did not indicate their top idea on the Google sheet; in the second batch of data collection, we used an online survey that required a response to prevent this issue. Finally, as an exploratory measure, each pair was given 5 min to evaluate each of their ideas on a seven-point scale (1 (least creative) to 7 (most creative)).

Once pairs completed both the idea-generation and the idea-selection task, each team member individually completed a survey on Qualtrics (accessed in 2016) in a separate room.

Stimulus 2: bubble wrap

Procedure

A total of 334 participants from a university student and staff pool in the United States participated in the study in exchange for US$15. We also recruited 18 participants from Craigslist in an effort to accelerate data collection. However, the students reported feeling uncomfortable, and idea generation performance dropped substantially with student–Craigslist pairs, so we removed these pairs from the analysis. Our final participant list did not overlap with participants in the first batch of data collection in the laboratory. The participants provided consent before beginning the study. This study was approved by the Stanford University Human Subjects Ethics Board (protocol 35916). The laboratory study was conducted by university research assistants blinded to the hypothesis who were not present during the group interaction.

We a priori excluded any pairs who experienced technical difficulties (such as screen share issues, audio feedback or dropped video calls) and aimed to collect 150 pairs in total. Our final sample consisted of 302 participants (177 females, 119 males, 2 non-binary, Mage = 23.5, s.d.age = 7.09; we are missing demographic and survey data from four of the participants). Mimicking the design of the first batch of data collection in the laboratory, pairs generated uses for bubble wrap for 5 min and then spent 1 min selecting their most creative idea. As before, half of the teams learned that they would be working together on the task in the same room (n = 74), whereas the other half (n = 77) were told that they would be working in separate (but identical) rooms and communicating using video technology (Zoom v.3.2). The groups were assigned in an alternating order, such that the first group was in-person, the second group was virtual and so on. This ensured an equal and unbiased recruitment of each condition. Again, one partner was randomly assigned to be the typist. The tasks were incentivized using the same structure as the first batch of collection.

For in-person pairs, each participant had a 15-inch task computer directly in front of them with their partner across from them and situated to their right. For virtual pairs, each participant had two 15-inch computers: a task computer directly in front of them and a computer displaying their partner’s face to their right (again, self-view was hidden). This set-up enabled us to unobtrusively measure gaze by using the task computer to record each participant’s face during the interaction: in both conditions, the task was directly in front of each participant and the partner was to each participant’s right.

In contrast to the first batch of data collection, we used Qualtrics (accessed in 2018) to collect task data. Pairs first generated alternative uses for bubble wrap. After 5 min, the page automatically advanced. We next asked each pair to select their most creative idea and defined a creative idea as both novel (that is, different from the normal uses of bubble wrap) and functional (that is, useful and easy to implement). The pair had exactly 1 min to select their most creative idea. After 1 min, the page automatically advanced. If the pair still had not selected their top idea, the survey returned to the selection page and marked that the team went over time. Virtual and in-person pairs did not significantly differ in the percentage of teams that went over time (that is, took longer than a minute); 17.6% of in-person pairs and 16.9% of virtual pairs went over time (Pearson’s χ²(1) = 0.001, P = 0.926). Finally, as exploratory measures, each pair (1) selected an idea from another idea set and then (2) evaluated how novel and functional their selected idea was on a seven-point scale.

Importantly, in both conditions, the task rooms were populated with ten props: five expected props (that is, props consistent with a behavioural laboratory schema (a filing cabinet, folders, a cardboard box, a speaker and a pencil box)) and five unexpected props (a skeleton poster, a large house plant, a bowl of lemons, blue dishes and yoga ball boxes; Extended Data Fig. 1, inspired by ref. 39). Immediately after the task, we moved the participants into a new room, separated them and asked the participants to individually recreate the task room on a sheet of paper39.

After the room recall, to measure social connection, each participant responded to an incentive-aligned trust game40. Specifically, each participant read the following instructions: “Out of the 150 groups in this study, 15 groups will be randomly selected to win $10. This is a REAL bonus opportunity. Out of the $10, you get the choose how much to share with your partner in the study. The amount of money you give to your partner will quadruple, and then your partner can choose how much (if any) of that money they will share back with you.”

The participants then selected how much money they would entrust to their partner in US$1 increments, between US$0 and US$10. Finally, the participants then completed a survey with exploratory measures.
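The payoff structure of the trust game can be illustrated with a short sketch. The function name, the default values and the validation rules are assumptions made for illustration; only the US$10 endowment, the US$1 increments and the quadrupling of the entrusted amount come from the instructions above.

```python
def trust_game_payoffs(sent, returned, endowment=10, multiplier=4):
    """Return (sender_payoff, partner_payoff) in US dollars.

    sent      -- whole-dollar amount the sender entrusts to the partner
    returned  -- amount the partner chooses to share back
    The entrusted amount is multiplied (quadrupled by default) before
    the partner decides how much of it to return.
    """
    if not (isinstance(sent, int) and 0 <= sent <= endowment):
        raise ValueError("sent must be a whole-dollar amount between 0 and the endowment")
    pot = sent * multiplier  # what the partner receives
    if not 0 <= returned <= pot:
        raise ValueError("returned must lie between 0 and the multiplied amount")
    sender_payoff = endowment - sent + returned
    partner_payoff = pot - returned
    return sender_payoff, partner_payoff


# Hypothetical example: the sender entrusts $5, the partner receives $20
# and shares $10 back.
print(trust_game_payoffs(5, 10))
```

In this sketch, the dependent measure of interest is simply `sent`, the amount entrusted; the partner's return decision is included only to make the incentive structure explicit.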

Dependent measures

Measure of idea generation performance

Researchers conducting the analyses were not blinded to the hypothesis and all data were analysed using R (v.4.0.1). We first computed total idea count by summing the total number of ideas generated by each pair. Then, for the key dependent measure of creative ideas, we followed the consensual assessment technique41 and had two undergraduate judges (from the same population and blind to condition and hypothesis) evaluate each idea on the basis of novelty. Specifically, each undergraduate judge was recruited by the university’s behavioural laboratory to help code data from a study. Each judge was given an Excel sheet with all of the ideas generated by all of the participants in a randomized order and was asked to evaluate each idea for novelty on a scale of 1 (not at all original/innovative/creative) to 7 (very original/innovative/creative) in one column of the Excel sheet and to evaluate each idea for value on a scale of 1 (not at all useful/effective/implementable) to 7 (very useful/effective/implementable) in an adjacent column. Anchors were adopted from ref. 42.

Judges demonstrated satisfactory agreeability (stimulus 1: αnovelty = 0.64, αvalue = 0.68, stimulus 2: αnovelty = 0.75, αvalue = 0.67) on the basis of intraclass correlation criteria delineated previously43. The scores were averaged to produce one creativity score for each idea. We computed the key measure of creative idea count by summing the number of ideas that each pair generated that surpassed the average creativity score of the study (that is, the grand mean of the whole study for each stimulus across the two conditions). Information about average creativity is provided in Supplementary Information R.
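The scoring rule described above can be sketched as follows. This is a minimal illustration rather than the analysis code used in the study (which was written in R): the function names and data layout are hypothetical, and the sketch assumes that the judges' novelty and value ratings are weighted equally when averaged into one creativity score.

```python
from collections import defaultdict
from statistics import mean


def creativity_score(novelty_ratings, value_ratings):
    """Average all judges' novelty and value ratings into one score.

    Assumption: the ratings are pooled with equal weight.
    """
    return mean(list(novelty_ratings) + list(value_ratings))


def creative_idea_counts(ideas):
    """Count, per pair, the ideas scoring above the grand mean.

    ideas -- list of (pair_id, creativity_score) tuples for ONE stimulus,
             pooled across both conditions.
    Returns (grand_mean, {pair_id: creative_idea_count}).
    """
    grand_mean = mean(score for _, score in ideas)
    counts = defaultdict(int)
    for pair_id, score in ideas:
        # A bool adds as 0 or 1, so pairs with no above-mean idea get 0.
        counts[pair_id] += score > grand_mean
    return grand_mean, dict(counts)


# Hypothetical scores for two pairs.
ideas = [("A", 5.0), ("A", 3.0), ("B", 4.5), ("B", 2.5), ("B", 5.0)]
grand_mean, counts = creative_idea_counts(ideas)
print(grand_mean, counts)
```

Because the threshold is the grand mean of each stimulus, the function would be applied separately to the data for each stimulus.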

Measure of selection performance

We followed previous research and calculated idea selection using two different methods23,24. First, we examined whether the creativity score of the idea selected by each pair differed by communication modality (both with and without controlling for the creativity score of the top idea). Second, we calculated the difference between the creativity score of the top idea and the creativity score of the selected idea. A score of 0 indicates that they selected their top-scoring idea, and a higher score reflects a poorer decision.
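The second selection measure is a simple difference score, sketched below. The function name and input format are hypothetical; the sketch assumes that each idea in a pair's set already has a creativity score.

```python
def selection_gap(idea_scores, selected_index):
    """Difference between the pair's top-scoring idea and the idea it selected.

    idea_scores    -- creativity scores of all ideas generated by one pair
    selected_index -- position of the idea the pair selected
    A result of 0 means the pair picked its top-scoring idea; larger
    values indicate a poorer selection decision.
    """
    top_score = max(idea_scores)
    return top_score - idea_scores[selected_index]


# Hypothetical pair with three ideas that selected the third one.
print(selection_gap([3.0, 5.5, 4.0], 2))
```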

Stimulus 2 process measures

Room recall

The room contained five expected props and five unexpected props. If virtual participants are more visually focused, they should recall fewer props and, specifically, the unexpected props that cannot be guessed using the schema of a typical behavioural laboratory. To test this, we counted the number of total props (out of the ten) and unexpected props (out of five) that participants drew and labelled when sketching the room from memory. We did not include other objects in the room (such as the computer and door) in our count.
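The counting step can be sketched as follows. In the study, a research assistant categorized each participant's free-text labels into the ten props; this sketch assumes that step has already been done, so that labels either match a prop name exactly or are ignored. The function and constant names are hypothetical.

```python
EXPECTED_PROPS = frozenset({
    "filing cabinet", "folders", "cardboard box", "speaker", "pencil box",
})
UNEXPECTED_PROPS = frozenset({
    "skeleton poster", "large house plant", "bowl of lemons",
    "blue dishes", "yoga ball boxes",
})


def recall_counts(labels):
    """Return (total_props, unexpected_props) recalled by one participant.

    labels -- already-categorized labels from the participant's sketch.
    Objects that are not among the ten props (such as the computer or
    the door) are ignored, and a prop listed twice counts once.
    """
    recalled = {label.strip().lower() for label in labels}
    unexpected = len(recalled & UNEXPECTED_PROPS)
    total = unexpected + len(recalled & EXPECTED_PROPS)
    return total, unexpected


# Hypothetical participant who drew five objects.
print(recall_counts(["Speaker", "door", "bowl of lemons", "computer", "speaker"]))
```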

Eye gaze

We used OpenFace (v.2.2.0), an open-source software package, to automatically extract and quantify eye gaze angles using the recording of each participant taken from their task computer34. From there, we had at least two independent coders (blinded to the hypothesis and condition) look at video frames of eye gaze angles extracted from the software and indicate the idiosyncratic threshold at which each participant’s eye gaze shifted horizontally (from left to centre, and centre to right, α = 0.98) and vertically (up to centre, and centre to down, α = 0.85). Out of 302 participants, 275 videos of participants yielded usable gaze data. Nine videos were not saved, six videos cut off participants’ eyes, four videos were too dark to reliably code, two videos were corrupted and could not load, two videos contained participants with glasses that resulted in eye gaze misclassification, two videos (one team) did not have their partner to their right and two videos were misclassified by OpenFace.

Using these thresholds, we calculated how often each participant looked at their partner, the task and the surrounding room. To repeat, the recording came from the task computer, and the partner was always situated to the participant’s right (or from the perspective of a person viewing the video, to the left). As human coders marked the thresholds (blind to the hypothesis and condition), we report the categorizations from the perspective of an observer of the video. Specifically, looking either (1) horizontally to the left and vertically centre or (2) horizontally to the left and vertically down was categorized as ‘partner gaze’; looking either (1) horizontally centre and vertically centre or (2) horizontally centre and vertically down was categorized as ‘task gaze’; and the remaining area was categorized as ‘room gaze’, which encompassed looking (1) horizontally left and vertically upward, (2) horizontally centre and vertically upward, (3) horizontally right and vertically upward, (4) horizontally right and vertically centre, and (5) horizontally right and vertically down (Fig. 2; consent was obtained to use these images for publication). We chose this unobtrusive methodology instead of more cumbersome eye-tracking hardware to maintain organic interactions—wearing strange headgear could make participants consciously aware of their eye gaze or change the natural dynamic of conversation.
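The categorization rule above can be written as a small lookup, sketched here from the perspective of an observer of the video. Everything beyond the nine-cell mapping is an assumption: the function names, the frame rate, the representation of each frame as a pair of horizontal and vertical gaze angles, and the convention that smaller angles mean further left or further up (the actual sign convention depends on the software output and camera set-up).

```python
from collections import Counter

H_BINS = ("left", "centre", "right")
V_BINS = ("up", "centre", "down")


def bin_angle(angle, lower, upper, labels):
    """Map an angle to one of three labels using two participant-specific thresholds."""
    if angle < lower:
        return labels[0]
    if angle > upper:
        return labels[2]
    return labels[1]


def gaze_category(horizontal, vertical):
    """Apply the nine-cell rule: partner, task or room gaze."""
    if horizontal not in H_BINS or vertical not in V_BINS:
        raise ValueError("unknown gaze bin")
    if vertical == "up" or horizontal == "right":
        return "room"
    # Remaining cells are vertically centre or down.
    return "partner" if horizontal == "left" else "task"


def gaze_seconds(frames, h_thresholds, v_thresholds, fps=30.0):
    """Seconds spent in each category, given (x_angle, y_angle) per frame.

    fps is an assumed frame rate, not a value reported in the study.
    """
    counts = Counter()
    for x_angle, y_angle in frames:
        h = bin_angle(x_angle, *h_thresholds, H_BINS)
        v = bin_angle(y_angle, *v_thresholds, V_BINS)
        counts[gaze_category(h, v)] += 1
    return {c: counts[c] / fps for c in ("partner", "task", "room")}


# Hypothetical four-frame recording at 2 frames per second.
frames = [(-0.3, 0.0), (0.0, 0.0), (0.0, -0.4), (0.4, 0.0)]
print(gaze_seconds(frames, (-0.2, 0.2), (-0.2, 0.2), fps=2.0))
```

Keeping the thresholds as per-participant arguments mirrors the idiosyncratic thresholds marked by the human coders.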

We excluded six videos that were less than 290 s long. The effects do not change in significance when these videos are included in the analyses. With these excluded videos, as before, virtual groups spent significantly more time looking at their partner (Mvirtual = 90.6 s, s.d. = 58.3, Min-person = 52.6 s, s.d. = 54.3, linear mixed-effect regression, n = 276 participants, b = 38.00, s.e. = 6.95, t139 = 5.46, P < 0.001, Cohen’s d = 0.68, 95% CI = 0.43–0.92) and spent significantly less time looking at the surrounding room (Mvirtual = 32.4 s, s.d. = 34.6, Min-person = 60.9 s, s.d. = 43.7, linear mixed-effect regression, n = 276 participants, b = 28.44, s.e. = 4.96, t145 = 5.74, P < 0.001, Cohen’s d = 0.73, 95% CI = 0.48–0.98; Fig. 2). There was again no evidence that time spent looking at the task differed by modality (Mvirtual = 176 s, s.d. = 63.6, Min-person = 184 s, s.d. = 63.0, linear mixed-effect regression, n = 276 participants, b = 7.39, s.e. = 7.63, t274 = 0.97, P = 0.334, Cohen’s d = 0.12, 95% CI = −0.12–0.35). Importantly, gaze around the room was significantly associated with an increased number of creative ideas (negative binomial regression, n = 146 pairs, b = 0.003, s.e. = 0.001, z = 3.10, P = 0.002). Furthermore, gaze around the room mediated the effect of modality on idea generation (5,000 nonparametric bootstraps, 95% CI = 0.05 to 1.15).

Data availability

The data (raw and cleaned) collected by the research team and reported in this Article and its Supplementary Information are available on Research Box (https://researchbox.org/282), except for the video, audio recordings and transcripts of participants, because we do not have permission to share the participants’ voices, faces or conversations. The cleaned summary data for the field studies are available in the same Research Box, but the raw data must be kept confidential, as these data are the intellectual property of the company. The Linguistic Analysis database is available online (https://liwc.wpengine.com/). Extended Data Tables 1–5 and Extended Data Figs. 2 and 3 are summary tables and figures, and the raw data associated with these tables are on Research Box (https://researchbox.org/282). Source data are provided with this paper.

Code availability

All custom code used to clean and analyse the data is available at Research Box (https://researchbox.org/568). The Linguistic Analysis database is available online (https://liwc.wpengine.com/). OpenFace is available at GitHub (https://github.com/TadasBaltrusaitis/OpenFace).

References

1. Barrero, J. M., Bloom, N. & Davis, S. J. Don’t force people to come back to the office full time. Harvard Business Review (24 August 2021).

2. Barrero, J. M., Bloom, N. & Davis, S. Why Working from Home Will Stick. NBER Working paper (2021); http://www.nber.org/papers/w28731.pdf.

3. Wuchty, S., Jones, B. F. & Uzzi, B. The increasing dominance of teams in production of knowledge. Science 316, 1036–1039 (2007).

4. Daft, R. L. & Lengel, R. H. Organizational information requirements, media richness and structural design. Manage. Sci. 32, 554–571 (1986).

5. Short, J., Williams, E. & Christie, B. The Social Psychology of Telecommunications (John Wiley & Sons, 1976).

6. Dennis, A. R., Fuller, R. M. & Valacich, J. S. Media, tasks, and communication processes: a theory of media synchronicity. MIS Q. 32, 575–600 (2008).

7. Kelly, J. Here are the companies leading the work-from-home revolution. Forbes (24 May 2020).

8. Salter, A. & Gann, D. Sources of ideas for innovation in engineering design. Res. Pol. 32, 1309–1324 (2003).

9. Anderson, M. C. & Spellman, B. A. On the status of inhibitory mechanisms in cognition: memory retrieval as a model case. Psychol. Rev. 102, 68–100 (1995).

10. Derryberry, D. & Tucker, D. M. In The Heart’s Eye: Emotional Influences in Perception and Attention (eds Niedenthal, P. M. & Kitayama, S.) 167–196 (Academic, 1994).

11. Posner, M. I. Cognitive Neuroscience of Attention (Guilford Press, 2011).

12. Rowe, G., Hirsh, J. B. & Anderson, A. K. Positive affect increases the breadth of attentional selection. Proc. Natl Acad. Sci. USA 104, 383–388 (2007).

13. Friedman, R. S., Fishbach, A., Förster, J. & Werth, L. Attentional priming effects on creativity. Creativity Res. J. 15, 277–286 (2003).

14. Mehta, R. & Zhu, R. J. Blue or red? Exploring the effect of color on cognitive task performances. Science 323, 1226–1229 (2009).

15. Mednick, S. The associative basis of the creative process. Psychol. Rev. 69, 220–232 (1962).

16. Nijstad, B. A. & Stroebe, W. How the group affects the mind: a cognitive model of idea generation in groups. Pers. Soc. Psychol. Rev. 10, 186–213 (2006).

17. Jung, R. E., Mead, B. S., Carrasco, J. & Flores, R. A. The structure of creative cognition in the human brain. Front. Hum. Neurosci. 7, 1–13 (2013).

18. Simon, H. A. A behavioral model of rational choice. Q. J. Econ. 69, 99–118 (1955).

19. Diehl, M. & Stroebe, W. Productivity loss in brainstorming groups: toward the solution of a riddle. J. Pers. Soc. Psychol. 3, 497–509 (1987).

20. Osborne, A. F. Applied Imagination (Scribner, 1957).

21. Mullen, B., Johnson, C. & Salas, E. Productivity loss in brainstorming groups: a meta-analytic integration. Basic Appl. Soc. Psychol. 12, 3–23 (1991).

22. Luo, L. & Toubia, O. Improving online idea generation platforms and customizing the task structure on the basis of consumers’ domain-specific knowledge. J. Mark. 79, 100–114 (2015).

23. Keum, D. D. & See, K. E. The influence of hierarchy on idea generation and selection in the innovation process. Organ. Sci. 28, 653–669 (2017).

24. Berg, J. M. Balancing on the creative highwire: forecasting the success of novel ideas in organizations. Admin. Sci. Q. 61, 433–468 (2016).

25. Gray, K. et al. “Forward Flow”: a new measure to quantify free thought and predict creativity. Am. Psychol. 74, 539–554 (2019).

26. West, M. A. The social psychology of innovation in groups. in Innovation and Creativity at Work (eds West, M. A. & Farr, J. L.) 309–322 (John Wiley & Sons, 1990).

27. Nemiro, J. E. Connection in creative virtual teams. J. Behav. Appl. Manage. 2, 93–115 (2016).

28. Sprecher, S. Initial interactions online-text, online-audio, online-video, or face-to-face: effects of modality on liking, closeness, and other interpersonal outcomes. Comput. Hum. Behav. 31, 190–197 (2014).

29. Tausczik, Y. R. & Pennebaker, J. W. The psychological meaning of words: LIWC and computerized text analysis methods. J. Lang. Soc. Psychol. 29, 24–54 (2010).

30. Chartrand, T. L. & van Baaren, R. Human Mimicry. In Advances in Experimental Social Psychology Vol. 41 (ed. Zanna, M. P.) 219–274 (Academic Press, 2009).

31. Ireland, M. E. et al. Language style matching predicts relationship initiation and stability. Psychol. Sci. 22, 39–44 (2011).

32. Kulesza, W. M. et al. The face of the chameleon: the experience of facial mimicry for the mimicker and the mimickee. J. Soc. Psychol. 155, 590–604 (2015).

33. Amos, B., Ludwiczuk, B. & Satyanarayanan, M. Openface: A general-purpose face recognition library with mobile applications. CMU School of Computer Science 6.2 (2016).

34. Jokinen, K., Nishida, M. & Yamamoto, S. On eye-gaze and turn-taking. In Proc. 2010 Workshop on Eye Gaze in Intelligent Human Machine Interaction 118–123 (ACM Press, 2010); https://doi.org/10.1145/2002333.2002352.

35. Boland, J. E., Fonseca, P., Mermelstein, I. & Williamson, M. Zoom disrupts the rhythm of conversation. J. Exp. Psychol. https://doi.org/10.1037/xge0001150 (2021).

36. Hinds, P. J. & Mortensen, M. Understanding conflict in geographically distributed teams: the moderating effects of shared identity, shared context, and spontaneous communication. Organ. Sci. 16, 290–307 (2005).

37. Mortensen, M. & Hinds, P. J. Conflict and shared identity in geographically distributed teams. Int. J. Confl. Manage. 12, 212–238 (2001).

38. Kenny, D. A. Quantitative Methods for social psychology. In Handbook of Social Psychology Vol. 1 (eds Lindzey, G. & Aronson, E.) 487–508 (Random House, 1985).

39. Brewer, W. F. & Treyens, J. C. Role of schemata in memory for places. Cogn. Psychol. 13, 207–230 (1981).

40. King-Casas, B. Getting to know you: reputation and trust in a two-person economic exchange. Science 308, 78–83 (2005).

41. Amabile, M. Social psychology of creativity: a consensual assessment technique. J. Pers. Soc. Psychol. 43, 997–1013 (1982).

42. Moreau, C. P. & Dahl, D. W. Designing the solution: the impact of constraints on consumers’ creativity. J. Consum. Res. 32, 13–22 (2005).

43. Cicchetti, D. V. & Sparrow, S. A. Developing criteria for establishing interrater reliability of specific items: applications to assessment of adaptive behavior. Am. J. Ment. Def. 86, 127–137 (1981).

Acknowledgements

We thank B. Ginn, N. Hall, S. Atwood and M. Nelson for help with data collection; M. Jiang, Y. Mao and B. Chivers for help with data processing; G. Eirich for statistical advice; M. Brucks, K. Duke and A. Galinsky for comments and insights; and J. Pyne and N. Itzikowitz for their partnership.

Author information

Contributions

M.S.B. supervised data collection by research assistants at the Stanford Behavior Lab in 2016–2021. M.S.B. and J.L. jointly supervised data collection by the corporate partner at the field sites. These data were analysed by M.S.B. and discussed jointly by both of the authors.

Ethics declarations

Competing interests

The authors declare no competing interests.

Peer review

Peer review information

Nature thanks Brian Uzzi and the other, anonymous, reviewer(s) for their contribution to the peer review of this work.

Additional information

Publisher’s note Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Extended data figures and tables

Extended Data Fig. 1 Materials and example data for room recall measure in the second batch of data collection in the lab.

(a) Photo demonstrating the prop placement in the lab room. Five props were expected (props consistent with a behavioural lab schema): a filing cabinet, folders, a cardboard box, a speaker, and a pencil box; and five props were unexpected (props inconsistent with a behavioural lab schema): a skeleton poster, a large house plant, a bowl of lemons, blue dishes, and yoga ball boxes. (b) Participant example of the data materials. After leaving the lab space, participants recreated the lab room on a piece of paper containing the basic layout of the room and then numbered each element. We then asked participants to list the identity of each element on a Qualtrics survey. A condition- and hypothesis-blind research assistant categorized each listing into one of the ten props and removed any other responses. We then counted how many expected and unexpected props were remembered by each participant.

Extended Data Fig. 2 Room recall mediates the effect of communication modality on idea generation.

This mediation model demonstrates that virtual participants remembered significantly fewer unexpected props in the experiment room and that this explains the effect of virtual interaction on creative idea generation. We ran an OLS regression for the a-link (communication modality predicting average recall of unexpected items per pair, n = 151 pairs, OLS regression, b = 0.42, s.e. = 0.17, t149 = 2.44, P = 0.016), and we ran a Negative Binomial regression for the b-link (number of average unexpected items recalled per pair predicting number of creative ideas generated, n = 151 pairs, Negative Binomial regression, b = 0.08, s.e. = 0.03, z = 2.48, P = 0.013). A mediation analysis with 10,000 nonparametric bootstraps revealed that recall of the room mediated the effect of modality on creative idea generation (95% confidence intervals of the indirect effect = −0.61 to −0.01). The total effect of modality condition on number of creative ideas generated was significant (n = 151 pairs, Negative Binomial regression, b = 0.15, s.e. = 0.07, z = 2.18, P = 0.030), but this effect was attenuated to non-significance when accounting for the unexpected recall mediator (n = 151 pairs, Negative Binomial regression, b = 0.12, s.e. = 0.07, z = 1.73, P = 0.083). See Supplementary Information C for model assumption tests of normality and heteroskedasticity. All tests are two-tailed and there were no adjustments made for multiple comparisons (for a discussion of our rationale, see Supplementary Information S).

Extended Data Fig. 3 Gaze mediates the effect of communication modality on idea generation.

This mediation model demonstrates that virtual participants spent less time looking around the room and that this explains the effect of virtual interaction on creative idea generation. We ran an OLS regression for the a-link (communication modality predicting average room gaze per pair, n = 146 pairs, OLS regression, b = −29.1, s.e. = 5.1, t144 = 5.69, P < 0.001), and we ran a Negative Binomial regression for the b-link (average room gaze per pair predicting number of creative ideas generated, n = 146 pairs, Negative Binomial regression, b = 0.003, s.e. = 0.001, z = 2.34, P = 0.020). A mediation analysis with 10,000 nonparametric bootstraps revealed that room gaze mediated the effect of modality on creative idea generation (95% confidence intervals of the indirect effect = −1.14 to −0.08). The total effect of modality condition on number of creative ideas generated was significant (n = 146 pairs, Negative Binomial regression, b = 0.17, s.e. = 0.07, z = 2.36, P = 0.019), but this effect was attenuated to non-significance when accounting for the room gaze mediator (n = 146 pairs, Negative Binomial regression, b = 0.09, s.e. = 0.08, z = 1.20, P = 0.231). See Supplementary Information C for model assumption tests of normality and heteroskedasticity. All tests are two-tailed and there were no adjustments made for multiple comparisons (for a discussion of our rationale, see Supplementary Information S).

Extended Data Fig. 4 The effect of virtual communication on forward flow across the progression of idea generation.

There was a significant interaction between modality and the position of an idea in the pair’s idea sequence on forward flow score across all studies (linear mixed-effect regression, n = 9966 idea scores, interaction term: b = −0.01, s.e. = 0.01, t358 = −2.09, P = 0.038). At the beginning of the idea generation task, ideas generated by in-person and virtual pairs were similarly connected to past ideas generated by each pair. However, by the eleventh idea, ideas generated by in-person pairs began to exhibit significantly more forward flow (that is, the ideas were less semantically associated) compared to those of virtual pairs (linear mixed-effect regression, n = 9966 idea scores, simple effect of modality on forward flow at the 11th idea: b = −0.12, s.e. = 0.06, t621 = −2.00, P = 0.047). Thus, in-person pairs generate progressively more disconnected ideas relative to virtual pairs. See Supplementary Information D for model assumption tests of normality and heteroskedasticity. We truncated the graph at 30 ideas to provide the most accurate representation of the majority of the data. All tests are two-tailed and there were no adjustments made for multiple comparisons (for a discussion of our rationale, see Supplementary Information S).
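As a rough illustration of the forward flow score referred to above, the sketch below computes, for each idea after the first, the mean semantic distance to all earlier ideas in the same sequence. It assumes that each idea has already been converted to a numeric vector (for example, by latent semantic analysis) and uses cosine distance; the function names and toy vectors are hypothetical, and this is not the authors' implementation.

```python
import math


def cosine_distance(u, v):
    """1 minus the cosine similarity of two non-zero vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    norm = math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v))
    return 1.0 - dot / norm


def forward_flow(vectors):
    """Per-idea forward flow for ideas 2..n of one pair's sequence.

    Each score is the mean distance between an idea and every idea that
    preceded it; higher scores mean the idea is less semantically
    associated with what came before.
    """
    scores = []
    for i in range(1, len(vectors)):
        distances = [cosine_distance(vectors[i], vectors[j]) for j in range(i)]
        scores.append(sum(distances) / len(distances))
    return scores


# Toy sequence: the second idea repeats the first, the third is unrelated.
print(forward_flow([(1.0, 0.0), (1.0, 0.0), (0.0, 1.0)]))
```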

Extended Data Fig. 5 Set-up for group size virtual study.

In the virtual-only study, we randomly assigned participants into groups of 2 or 4 people. Participants worked on a Google sheet and were instructed to set up their screen such that half of their screen was the task and the other half of the screen was their Zoom window. The self-view was hidden, and participants either saw one partner (2-person condition), or three teammates (4-person condition). Consent was obtained to use these images for publication.

Supplementary information

Supplementary Methods

Sections A–C test the model assumptions. Sections D–J examine the alternative explanations mentioned in the main text and are summarized in Extended Data Figs. 7 and 8. Sections K–M detail additional data and tests examining generalizability. Sections P–Q discuss the limitations. Section R includes secondary analyses in the methods.

Cite this article

Brucks, M.S., Levav, J. Virtual communication curbs creative idea generation. Nature (2022). https://ift.tt/hwlaDkG

DOI: https://ift.tt/hwlaDkG
